Extracting Data from Unstructured Text: Complete Guide with NLP, ML & LLMs

IDC estimates that over 80% of global data is unstructured (IDC Report, 2023). This includes emails, social media posts, customer reviews, and business reports. Because these sources lack a standardized format, they are difficult to analyze with conventional tools.

- Is your business losing insights hidden in emails or customer feedback?

- Can your team quickly analyze hundreds of unstructured documents effectively?

- How are competitors benefiting from unstructured text analytics?

Organizations struggle to turn unstructured text into actionable data. Manual methods are slow and error-prone, and the lost productivity is costly.

Key Takeaways

- Extracting data from unstructured text converts raw text into organized information using NLP and machine learning

- Web scraping and API extraction provide access to online unstructured data sources for analysis

- Data parsing automation reduces manual processing time while improving accuracy across document types

- Real-world applications include customer sentiment analysis, fraud detection, and medical record processing

- Ethical considerations and data quality management are critical for successful text extraction projects

- Modern LLMs like GPT handle complex context interpretation better than traditional extraction methods

- Proper validation and refinement steps prevent inaccurate data from affecting business decisions

What is Unstructured Text Data?

Extracting data from unstructured text involves using various techniques to identify and retrieve specific information from text that doesn’t follow a predefined format. This process transforms raw, free-flowing text into structured data formats for analysis and use.

Common examples of unstructured text include:

- Email communications and customer support tickets

- Social media posts and user-generated content

- Survey responses and feedback forms

- Legal contracts and compliance documents

- Medical records and patient notes

- News articles and research papers

Unlike structured data organized in databases with clear rows and columns, unstructured text contains information scattered throughout paragraphs. Context, meaning, and relationships between data points require advanced processing techniques to identify and extract.

Organizations face significant challenges when dealing with unstructured text. Information exists in various formats, languages, and writing styles. Manual extraction methods prove time-consuming and error-prone, especially when processing large document volumes.

Using data extraction tools improves customer interactions, business decisions and growth opportunities.

Methods & Techniques for Extracting Data from Unstructured Text

Modern data extraction relies on multiple complementary techniques. Each method addresses specific aspects of text processing and information retrieval.

The key methods involved are:

1. Natural Language Processing (NLP)

NLP techniques like tokenization, part-of-speech tagging, named entity recognition, and dependency parsing are used to identify and categorize information within text. These methods break down language into components that machines can understand and process.

Core NLP processes include:

- Tokenization: Breaking text into individual words, phrases, or sentences

- Named Entity Recognition: Identifying people, organizations, locations, and dates

- Sentiment Analysis: Determining emotional tone and opinions within text

- Part-of-Speech Tagging: Classifying words by grammatical roles
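To make tokenization and entity recognition concrete, here is a minimal sketch using only Python's standard library. The regular expressions stand in for a trained NER model and are an assumption made for illustration. A production system would more likely use a library such as spaCy or NLTK. The sample sentence and the names `tokenize` and `extract_entities` are hypothetical.

```python
import re

# Simple patterns standing in for a trained NER model (illustrative only).
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# A token is a word (optionally joined by - ' @ .) or a single punctuation mark.
TOKEN_RE = re.compile(r"\w+(?:[-'@.]\w+)*|[^\w\s]")


def tokenize(text: str) -> list[str]:
    """Split text into word-like tokens and punctuation marks."""
    return TOKEN_RE.findall(text)


def extract_entities(text: str) -> dict[str, list[str]]:
    """Return ISO-style dates and email addresses found by the patterns above."""
    return {
        "dates": DATE_RE.findall(text),
        "emails": EMAIL_RE.findall(text),
    }


sample = "Contact jane.doe@example.com before 2024-03-15 about the refund."
tokens = tokenize(sample)
entities = extract_entities(sample)
```

Rule-based patterns like these only cover entities with a rigid shape. Names of people, organizations, and locations generally need a statistical or neural model.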

Organizations combine these NLP steps with ML to classify customer feedback, detect emerging trends, or organize legal documents efficiently. Learning algorithms also make document classification simpler.

2. Machine Learning (ML)

ML algorithms, including deep learning models, are employed to learn patterns and relationships in text data, enabling the extraction of specific entities, relationships, and other structured information. These systems improve accuracy through training on large datasets.

Machine learning applications:

- Text Classification: Sorting documents into predefined categories

- Topic Modeling: Discovering themes and subjects across document collections

- Pattern Recognition: Identifying recurring structures in text data

- Clustering: Grouping similar documents without predetermined categories
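As one example of text classification, the sketch below implements a small multinomial naive Bayes classifier with add-one smoothing in plain Python. Naive Bayes is chosen here only because it fits in a few lines; the article does not prescribe a particular algorithm. The training sentences and the labels `billing` and `technical` are invented for illustration.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace (deliberately simplistic)."""
    return text.lower().split()


class NaiveBayesClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = defaultdict(Counter)
        self.doc_counts: Counter = Counter()
        self.vocab: set[str] = set()

    def fit(self, texts: list[str], labels: list[str]) -> None:
        for text, label in zip(texts, labels):
            words = tokenize(text)
            self.word_counts[label].update(words)
            self.doc_counts[label] += 1
            self.vocab.update(words)

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = "", -math.inf
        for label in self.doc_counts:
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(self.doc_counts[label] / total_docs)
            total_words = sum(self.word_counts[label].values())
            for word in tokenize(text):
                count = self.word_counts[label][word] + 1
                score += math.log(count / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical training data for a support-ticket router.
classifier = NaiveBayesClassifier()
classifier.fit(
    [
        "refund not received for my order",
        "charged twice on my card",
        "app crashes when I log in",
        "login page shows an error",
    ],
    ["billing", "billing", "technical", "technical"],
)
prediction = classifier.predict("I was charged twice")
```

With only four training sentences the model is a toy. Real deployments train on thousands of labeled documents and usually rely on a framework rather than hand-written code.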

3. Large Language Models (LLMs)

LLMs like GPT excel at understanding and processing complex text and can be used to extract structured data by providing prompts that define the desired output format. Encoder models like BERT are more often fine-tuned for tasks such as entity recognition and classification than prompted directly. Both handle context interpretation better than traditional methods.

Advanced LLM capabilities:

- Context Understanding: Processing relationships between distant text elements

- Complex Query Processing: Answering specific questions about document content

- Multi-format Output: Generating structured data in various formats

- Domain Adaptation: Learning industry-specific terminology and patterns
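Prompt-based extraction usually has two halves: a prompt that pins down the output format, and a validation step that rejects replies that do not match it. The sketch below shows both without calling any model. The field names, the `build_prompt` and `parse_response` helpers, and the sample reply are all assumptions for illustration. Sending the prompt to an actual LLM is left out because the API depends on the provider.

```python
import json

# Hypothetical schema: field name -> expected Python type.
REQUIRED_FIELDS = {"customer_name": str, "order_id": str, "issue": str}


def build_prompt(document: str) -> str:
    """Ask for JSON only, with an explicit list of keys."""
    fields = ", ".join(REQUIRED_FIELDS)
    return (
        "Extract the following fields from the text and reply with a single "
        f"JSON object containing exactly these keys: {fields}. "
        "Use null for any field that is not present.\n\n"
        f"Text:\n{document}"
    )


def parse_response(raw: str) -> dict:
    """Parse the model reply and reject anything that breaks the schema."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_FIELDS.keys() - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    for key, expected_type in REQUIRED_FIELDS.items():
        value = data[key]
        if value is not None and not isinstance(value, expected_type):
            raise ValueError(f"{key} should be {expected_type.__name__} or null")
    # Drop any extra keys the model may have added.
    return {key: data[key] for key in REQUIRED_FIELDS}


# A hand-written reply standing in for real model output.
example_reply = (
    '{"customer_name": "Jane Doe", "order_id": "A-1042", "issue": "late delivery"}'
)
record = parse_response(example_reply)
```

Validating every reply matters because a model can return malformed JSON or omit a field. Failing loudly here keeps bad records out of downstream systems.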

4. Web Scraping and API Extraction

Data can be extracted from websites or other online sources using web scraping techniques and APIs, which can provide access to structured data or require further processing to extract information. This method accesses real-time data from external sources.

Web scraping techniques:

- HTML Parsing: Extracting text from web page structures

- API Integration: Accessing structured data through programming interfaces

- Content Monitoring: Tracking changes in online text sources

- Rate Limiting: Managing extraction speed to avoid service restrictions
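The HTML parsing step can be sketched with Python's built-in `html.parser` module. The example works on an inline HTML string so that it runs without network access. The page content and the `TextExtractor` class name are hypothetical. Fetching real pages, and pausing between requests to respect a site's rate limits and terms of use, would sit in front of this step.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        # Keep only non-empty text that is outside skipped tags.
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def html_to_text(html: str) -> str:
    """Return the page's visible text fragments joined by single spaces."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


page = """
<html><head><style>p { color: red; }</style></head>
<body><h1>Product review</h1><p>Battery life is <b>excellent</b>.</p>
<script>trackVisit();</script></body></html>
"""
text = html_to_text(page)
```

Joining fragments with spaces is a simplification that can leave a stray space before punctuation. Dedicated libraries handle layout and malformed markup more gracefully.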

5. Optical Character Recognition (OCR)

OCR is used to convert images of text into machine-readable text, making it possible to extract information from scanned documents or images. Modern OCR systems handle various fonts, languages, and document qualities.

OCR processing stages:

- Image Preprocessing: Cleaning and preparing scanned documents

- Character Recognition: Converting visual text into digital characters

- Layout Analysis: Understanding document structure and formatting

- Quality Validation: Checking accuracy of converted text
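The quality validation stage can be illustrated without an OCR engine. The sketch below takes a string that is assumed to come from a numeric field, repairs characters that OCR commonly confuses with digits, and accepts the result only if it has the expected shape. The look-alike table and the `clean_amount` helper are illustrative assumptions, not part of any OCR library.

```python
import re
from typing import Optional

# Characters often misread in place of digits (illustrative, not exhaustive).
DIGIT_LOOKALIKES = str.maketrans(
    {"O": "0", "o": "0", "l": "1", "I": "1", "S": "5", "B": "8"}
)
# Expected shape of a monetary amount: digits, a dot, two decimals.
AMOUNT_RE = re.compile(r"\d+\.\d{2}")


def clean_amount(raw: str) -> Optional[str]:
    """Repair digit look-alikes in a numeric field, then validate its shape.

    Returns the repaired string, or None when the value still does not
    look like an amount and should be sent for manual review.
    """
    candidate = raw.strip().translate(DIGIT_LOOKALIKES)
    return candidate if AMOUNT_RE.fullmatch(candidate) else None


repaired = clean_amount("1O5.5O")
rejected = clean_amount("N/A")
```

This kind of substitution is only safe on fields known to be numeric. Applied to free text it would corrupt ordinary words.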

6. Data Parsing Automation

Tools and techniques can automate the process of parsing unstructured data, identifying key elements, and transforming them into a structured format. Automation reduces manual effort while maintaining consistency across processing tasks.
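A minimal sketch of parsing automation follows: a table of named patterns is applied to a document, and the matches become a structured record. The invoice text, the field names, and the patterns are hypothetical and would need tuning for real documents.

```python
import re

# Hypothetical field patterns; each captures the value in group 1.
FIELD_PATTERNS = {
    "invoice_number": re.compile(
        r"Invoice\s*(?:No\.?|#)\s*:?\s*([A-Z0-9-]+)", re.IGNORECASE
    ),
    "date": re.compile(r"Date\s*:\s*(\d{4}-\d{2}-\d{2})", re.IGNORECASE),
    "total": re.compile(r"Total\s*:\s*\$?([\d,]+\.\d{2})", re.IGNORECASE),
}


def parse_document(text: str) -> dict:
    """Return one value per field, or None when a field is not found."""
    record = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = pattern.search(text)
        record[name] = match.group(1) if match else None
    return record


document = """ACME Supplies
Invoice No: INV-2031
Date: 2024-05-02
Thank you for your business.
Total: $1,240.50"""

record = parse_document(document)
```

Keeping the patterns in a single dictionary means a new field can be added without touching the parsing loop, which is the consistency benefit automation is meant to deliver.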

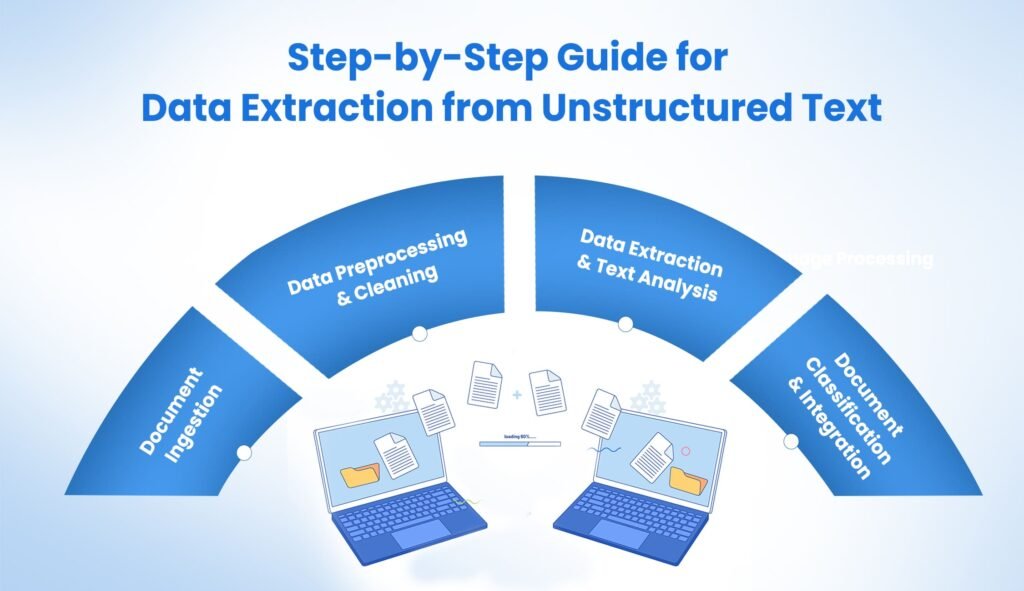

Step-by-Step Guide for Data Extraction from Unstructured Text

Data extraction applies to a wide range of document automation tasks, but the core process remains the same. Whether you want to extract text from an image or a PDF, extraction follows these structured steps:

Step 1: Document Ingestion

Documents enter automated systems through databases, file systems, or APIs. Secure and standardized document ingestion ensures seamless subsequent processing. Web scraping is often employed to gather data from online sources efficiently.

Step 2: Data Preprocessing & Cleaning

Preprocessing includes removing irrelevant text, normalizing data formats, and using OCR for image-based text. Techniques like regular expressions and data parsing automation simplify data extraction, ensuring accuracy and readiness for analysis.

Step 3: Data Extraction & Text Analysis

Using NLP and ML, critical data is extracted, such as keywords, entities, and sentiments. Analyzing text in this step generates structured data for decision-making. Intelligent document processing tools rely on text analysis to train their learning models.

Step 4: Document Classification & Integration

Extracted data is classified into predefined categories. Integrating it into business workflows enables automated decisions and helps boost productivity.

Top Tools & Platforms for Extracting Unstructured Text Data

The following tools can make extraction significantly more efficient:

NLP Libraries (spaCy, NLTK)

NLP libraries like spaCy and NLTK perform preprocessing, entity recognition, sentiment analysis, and tokenization efficiently, simplifying complex extraction tasks.

Machine Learning Frameworks (TensorFlow, PyTorch)

Frameworks like TensorFlow and PyTorch help build predictive models for categorization and analysis. These models can be customized to the specific needs of organizations.

Cloud-based Solutions (Amazon Comprehend, Google NLP)

Cloud platforms provide scalable NLP solutions, supporting entity extraction, topic modeling, and sentiment analysis, enabling businesses to process vast amounts of text data rapidly.

Specific Applications and Industries

Different industries leverage unstructured text extraction to solve unique business challenges and improve operational outcomes.

Finance: Advanced Risk Management

Financial institutions use text extraction for fraud detection, risk assessment, and compliance monitoring. Banks analyze transaction descriptions, customer communications, and regulatory filings to identify suspicious patterns.

Reported outcomes include:

- 40% reduction in fraud detection time

- Improved compliance reporting accuracy

- Better risk assessment through customer communication analysis

Healthcare: Patient Care Optimization

Patient record analysis, diagnosis improvement, and treatment plan optimization benefit from structured data extraction. Healthcare providers process clinical notes, test results, and patient feedback to improve care quality.

Reported outcomes include:

- Faster diagnosis through comprehensive record analysis

- Reduced medical errors from incomplete information

- Better treatment outcomes through data-driven decisions

Retail: Customer Intelligence

Customer sentiment analysis, product trend identification, and targeted marketing campaigns rely on processing customer reviews, social media mentions, and survey responses.

Key outcomes achieved:

- 25% improvement in customer satisfaction scores

- More accurate product development decisions

- Increased marketing campaign effectiveness

Legal: Document Processing Acceleration

Contract analysis, case research, and legal document review require extracting specific clauses, dates, and legal entities from complex documents. Law firms process contracts faster while reducing oversight risks.

Benefits of Extracting Data from Unstructured Text

Organizations implementing text extraction solutions experience measurable improvements across multiple business areas.

Improved Decision Making

Structured data provides a clearer picture of information, enabling more informed decisions. Leaders access comprehensive insights rather than relying on incomplete manual analysis.

Increased Efficiency

Automated data extraction reduces manual effort and frees up resources for other tasks. Teams focus on analysis and strategy instead of data collection and processing.

Competitive Advantage

Deeper insights into customer behavior and market trends lead to better products and services. Companies respond faster to market changes and customer needs.

Cost Reduction

Efficient data extraction can reduce operational costs. Organizations save money on manual processing while improving data accuracy and completeness.

Processing thousands of documents manually costs organizations significant time and resources. Automated extraction systems handle large volumes consistently while reducing human error rates.

Steps Involved in the Process

Successful data extraction follows a systematic approach that ensures accuracy and reliability across different document types and data sources.

The extraction process includes these steps:

1. Identify Data Sources

Determine the sources of unstructured text data relevant to your needs. Sources include internal documents, external websites, social media platforms, and customer communication channels.

2. Choose Extraction Methods

Select the appropriate NLP, ML, or other techniques based on the type of data and desired output. Consider factors like data volume, complexity, and required accuracy levels.

3. Preprocess the Text

Clean and prepare the text data using NLP techniques like tokenization, stemming, or lemmatization. Remove noise, standardize formats, and handle special characters or encoding issues.
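The cleaning part of this step might look like the sketch below, which uses only the standard library. It normalizes Unicode, lowercases, strips URLs and punctuation, and drops a few stopwords. The stopword list and sample text are assumptions, and stemming or lemmatization is left to an NLP library because a correct implementation is language-specific.

```python
import re
import unicodedata

# A tiny illustrative stopword list; real lists are much longer.
STOPWORDS = {"a", "an", "the", "for", "of", "to"}


def preprocess(text: str) -> list[str]:
    """Normalize raw text and return a list of cleaned tokens."""
    # NFKC folds compatibility characters, e.g. non-breaking spaces.
    text = unicodedata.normalize("NFKC", text).lower()
    # Remove URLs before punctuation stripping breaks them apart.
    text = re.sub(r"https?://\S+", " ", text)
    # Replace everything except word characters, whitespace, apostrophes.
    text = re.sub(r"[^\w\s']", " ", text)
    return [token for token in text.split() if token not in STOPWORDS]


sample = "Great   PRODUCT!! See https://example.com\u00a0for details."
tokens = preprocess(sample)
```

The order of operations matters: URLs are removed first because stripping punctuation would otherwise split them into meaningless fragments.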

4. Extract Structured Data

Apply the chosen methods to identify and extract the desired information. Use named entity recognition, pattern matching, or machine learning models to find specific data points.

5. Validate and Refine

Ensure the extracted data is accurate and refine the process as needed. Check results against known examples and adjust extraction parameters to improve performance.
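Checking results against known examples is commonly done with precision and recall. The sketch below compares a set of extracted field-value pairs with a hand-labeled set. The sample values are invented for illustration.

```python
def precision_recall(predicted: set, expected: set) -> tuple[float, float]:
    """Compare extracted items with a hand-labeled reference set.

    Precision: share of extracted items that are correct.
    Recall: share of expected items that were extracted.
    """
    true_positives = len(predicted & expected)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(expected) if expected else 0.0
    return precision, recall


# Hypothetical labeled example and extractor output (one field is wrong).
expected = {
    ("invoice_number", "INV-2031"),
    ("date", "2024-05-02"),
    ("total", "1,240.50"),
}
predicted = {
    ("invoice_number", "INV-203"),
    ("date", "2024-05-02"),
    ("total", "1,240.50"),
}
precision, recall = precision_recall(predicted, expected)
```

Here two of the three extracted pairs are correct, so both scores are two thirds. Tracking these numbers across runs shows whether a change to the extraction parameters actually helped.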

6. Store or Analyze the Data

Store the extracted data in a structured format for further analysis or use in other applications. Integrate results into existing systems or analytical workflows.
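One simple way to store extracted records in a structured format is a relational table. The sketch below uses Python's built-in `sqlite3` module with an in-memory database. The table name, columns, and sample records are hypothetical, and a production system would likely write to its existing database or data warehouse instead.

```python
import sqlite3


def store_records(conn: sqlite3.Connection, records: list[dict]) -> None:
    """Create the table if needed and upsert one row per document."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS extractions ("
        "doc_id TEXT PRIMARY KEY, customer TEXT, sentiment TEXT)"
    )
    # Named placeholders keep values out of the SQL string itself.
    conn.executemany(
        "INSERT OR REPLACE INTO extractions "
        "VALUES (:doc_id, :customer, :sentiment)",
        records,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
store_records(
    conn,
    [
        {"doc_id": "t-1", "customer": "Jane Doe", "sentiment": "positive"},
        {"doc_id": "t-2", "customer": "Sam Lee", "sentiment": "negative"},
        {"doc_id": "t-3", "customer": "Ana Ruiz", "sentiment": "positive"},
    ],
)

# Once the data is structured, analysis becomes an ordinary SQL query.
rows = conn.execute(
    "SELECT sentiment, COUNT(*) FROM extractions "
    "GROUP BY sentiment ORDER BY sentiment"
).fetchall()
```

Using the document ID as the primary key with `INSERT OR REPLACE` means reprocessing a document overwrites its earlier row rather than creating a duplicate.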

Each step requires careful attention to maintain data quality and extraction accuracy. Organizations often iterate through these steps to optimize results for specific use cases.

Challenges and Considerations

Organizations face several obstacles when implementing unstructured text extraction systems. Understanding these challenges helps teams prepare appropriate solutions.

Data Quality Issues

Ensuring the accuracy and consistency of extracted data is crucial. Poor input data quality affects extraction results and downstream analysis reliability.

Common quality problems include:

- Inconsistent formatting across documents

- OCR errors in scanned documents

- Incomplete or corrupted text files

- Multiple languages within single documents

Complexity Challenges

Unstructured text can be complex and require advanced NLP and ML techniques. Simple rule-based systems fail when processing diverse document types or handling context-dependent information.

Scalability Requirements

Extracting data from large volumes of text can be computationally intensive. Organizations need systems that handle growing data volumes without performance degradation.

Ethical Considerations

Data privacy and security must be addressed when handling sensitive information. Companies must comply with regulations like GDPR while protecting customer and employee data.

Ethical guidelines include:

- Obtaining proper consent for data processing

- Implementing secure data handling procedures

- Anonymizing personal information when possible

- Regular audits of data usage and access
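Anonymizing personal information can start with pattern-based redaction, sketched below with the standard library. The patterns and placeholder tokens are assumptions for illustration. Regular expressions miss names and many identifier formats, so this is a first pass rather than a guarantee of compliance.

```python
import re

# Illustrative patterns; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# A digit, at least seven digits or separators, then a closing digit.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


sample = "Call me at +1 (555) 010-9999 or write to sam@example.org."
redacted = redact(sample)
```

The phone pattern is intentionally loose and will also match other long digit sequences such as dates or order numbers. Over-redaction is usually the safer failure mode, but it should be reviewed against the data actually being processed.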

These challenges require ongoing attention and specialized expertise. Organizations benefit from choosing established solutions rather than building extraction systems from scratch.

Top Tools and Platforms

Modern extraction projects rely on proven tools and platforms that handle different aspects of text processing and analysis.

Handling large data volumes requires scalable tools. Cloud-based platforms and automated processes can absorb growing data volumes, and intelligent document processing (IDP) solutions can both scan and process large volumes of documents quickly.

NLP Libraries and Frameworks

spaCy and NLTK provide comprehensive text processing capabilities including tokenization, entity recognition, and sentiment analysis. These libraries support multiple languages and offer pre-trained models for common tasks.

Key features:

- Pre-trained language models for immediate use

- Custom model training capabilities

- Integration with popular programming languages

- Active community support and documentation

Machine Learning Platforms

TensorFlow and PyTorch enable building custom models for specific extraction needs. These frameworks support deep learning approaches that handle complex text patterns and relationships.

Cloud-Based Solutions

Amazon Comprehend and Google Cloud Natural Language offer scalable processing without infrastructure management. These services handle large document volumes while providing APIs for easy integration.

Service advantages:

- Automatic scaling based on processing demands

- Pay-per-use pricing models

- Regular updates and improvements

- Built-in security and compliance features

Organizations often combine multiple tools to create comprehensive extraction workflows. The choice depends on technical requirements, budget constraints, and internal expertise levels.

Compliance and Security Risks

Extracting sensitive data creates compliance risks. Regularly updated security practices and adherence to regulatory standards reduce potential legal and reputational risks.

Linguistic complexities, domain-specific terminology, and context interpretation issues present additional extraction challenges. Specialized NLP tools and domain-trained ML models can address these issues.

Why Should You Choose KlearStack?

Extracting data from unstructured text needs precision and flexibility. KlearStack simplifies this process for organizations processing vast document volumes daily.

Solutions Offered:

- Template-free data extraction adaptable to various document types

- Self-learning algorithms improve extraction accuracy continuously

- Real-time analytics and hassle-free integration with existing systems

- Top-notch security and compliance (GDPR, DPDPA standards)

Proven Business Impact:

- Achieve up to 99% data extraction accuracy

- Reduce document processing costs by 85%

- Increase operational productivity by 500%

Conclusion

Businesses using methods like text mining, NLP, OCR, and machine learning algorithms can turn vast amounts of unstructured data into useful information. Extracting data from unstructured text has many benefits, including:

- Reduced manual effort and errors

- Improved planning through quality data

- Better regulatory compliance and lower risks

- Broader business intelligence capabilities

Industries such as finance, healthcare, and legal services benefit in customer interactions, fraud detection, and compliance monitoring. Effective extraction tools like KlearStack can improve profitability and competitiveness.

Adopting effective data extraction practices is now essential, not optional.

FAQs on Extracting Data from Unstructured Text

What does extracting data from unstructured text involve?

Extracting data from unstructured text involves converting raw text into structured formats. It uses NLP, machine learning, and OCR technologies for processing.

Which industries benefit most from unstructured text extraction?

Finance, healthcare, retail, and legal sectors benefit significantly. These industries use extraction for customer insights, compliance monitoring, and operational improvements.

How do NLP and machine learning help with extraction?

NLP identifies context and relationships within text data. Machine learning algorithms recognize patterns and improve accuracy through training datasets.

Which tools are commonly used?

Popular tools include spaCy for NLP processing, TensorFlow for machine learning, and cloud services like Amazon Comprehend.