Extracting Information from Unstructured Text Using Algorithms

There are times when you are confronted by unstructured documents that mix relevant and irrelevant information, and you have to scan through all of it yourself to find the parts that matter. Imagine if this tedious task could be automated so that you could extract only the information you need from a document with little manual effort.

With Artificial Intelligence influencing almost all spheres of life today, high-quality information extraction from documents has become a reality with unstructured data extraction tools.

Analyzing textual data involves developing an understanding of the context, finding links between different textual entities, and sometimes discovering the parts of a long document that convey the crux of the whole document most effectively.

With Artificial Intelligence-based information extraction tools, this kind of data analysis, which has traditionally required human judgment, can be mimicked to a large extent.

In this article, let us look at the process of analyzing data to extract information, and how it can improve workflow efficiency in a variety of settings.

Methodology for Information Extraction

Information Extraction from documents using artificial intelligence tools has been an area of extensive research.

Three methodologies that have been used for information extraction are:

Rule-based Method

As the name suggests, the rule-based method uses specific rules to extract text from a given document. Rule-based information extraction is most commonly used for semi-structured web pages. To use it, the system must first learn rules that describe the syntactic or semantic boundaries of the target text, that is, rules that identify where the text to be extracted begins and ends.

Two procedures are used for the rule-based information extraction approach: the bottom-up method and the top-down method. In the bottom-up method, the rules governing the boundaries of the content are learned by going from special cases to general cases. The top-down method works in the opposite direction, starting from general rules and refining them toward special cases.
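As a rough illustration of what boundary rules look like once they exist, here is a minimal sketch. It assumes the rules are written by hand as regular expressions rather than learned, and the field names (`invoice_no`, `date`, `total`) and the sample text are hypothetical.

```python
import re

# Hypothetical, hand-written boundary rules. Each pattern describes the text
# that precedes a value (left boundary) and the shape of the value itself.
RULES = {
    "invoice_no": re.compile(r"Invoice\s*(?:No\.?|#)\s*:?\s*(?P<value>[A-Z0-9-]+)"),
    "date": re.compile(r"Date\s*:\s*(?P<value>\d{4}-\d{2}-\d{2})"),
    "total": re.compile(r"Total\s*:\s*\$?(?P<value>\d+(?:\.\d{2})?)"),
}


def extract_fields(text):
    """Apply every rule and keep the first match found for each field."""
    result = {}
    for field, pattern in RULES.items():
        match = pattern.search(text)
        if match:
            result[field] = match.group("value")
    return result


sample = "Invoice No: INV-1042\nDate: 2023-05-17\nTotal: $249.90"
fields = extract_fields(sample)
print(fields)
# {'invoice_no': 'INV-1042', 'date': '2023-05-17', 'total': '249.90'}
```

A rule-learning system would produce patterns of this kind automatically, either by generalizing from specific examples (bottom-up) or by specializing broad patterns (top-down).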

Classification-based Method

The second principled approach to information extraction, based on supervised machine learning models, is called the Classification-Based Methodology. Its primary objective is to treat information extraction as a classification task. This works in two phases: the learning phase and the extraction phase.

In the learning phase, pre-existing labeled documents are used to train models that form the basis of future predictions. During the extraction phase, these learned models are used to label new, unlabeled documents.

The formalization of Information Extraction as a classification task is the starting point for the detection of content boundaries. In the classification model, the basic unit for Information Extraction is called a token. The extraction phase is carried out by algorithms called classifiers, which assign a label to each token.
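The two phases can be sketched with a very small token classifier. This is only an illustration under stated assumptions: it uses a plain multi-class perceptron written from scratch, and the feature set, the `NAME`/`O` label scheme, and the training sentences are all hypothetical.

```python
from collections import defaultdict

LABELS = ["O", "NAME"]  # "O" marks tokens outside any field of interest


def features(tokens, i):
    """Describe token i by simple, hand-picked (hypothetical) features."""
    word = tokens[i]
    prev = tokens[i - 1].lower() if i > 0 else "<s>"
    return [
        "bias",
        "word=" + word.lower(),
        "prev=" + prev,
        "cap=" + str(word[0].isupper()),
        "first=" + str(i == 0),
    ]


def predict(weights, feats):
    """Return the label with the highest summed feature weight."""
    return max(LABELS, key=lambda label: sum(weights[label][f] for f in feats))


def train(documents, epochs=5):
    """Learning phase: fit perceptron weights on labeled documents."""
    weights = {label: defaultdict(int) for label in LABELS}
    for _ in range(epochs):
        for tokens, labels in documents:
            for i, gold in enumerate(labels):
                feats = features(tokens, i)
                guess = predict(weights, feats)
                if guess != gold:  # update only on mistakes
                    for f in feats:
                        weights[gold][f] += 1
                        weights[guess][f] -= 1
    return weights


def extract(weights, tokens):
    """Extraction phase: classify each token of an unlabeled document."""
    return [predict(weights, features(tokens, i)) for i in range(len(tokens))]


TRAINING = [
    ("Contact Alice at noon".split(), ["O", "NAME", "O", "O"]),
    ("Contact Bob at home".split(), ["O", "NAME", "O", "O"]),
    ("Meet Carol at work".split(), ["O", "NAME", "O", "O"]),
]
model = train(TRAINING)
predicted = extract(model, "Contact Dave at school".split())
print(predicted)  # ['O', 'NAME', 'O', 'O']
```

Note that each token is classified on its own here: the label chosen for one token has no influence on the label chosen for the next.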

Sequential Labeling-based Method

Just like classification, Information Extraction can also be treated as a sequential labeling task. Here too, the basic unit of extraction is the token. Tokens are processed one at a time, and each is assigned a label that identifies what it represents.

Sequential labeling is the only one of these three popular approaches that actually models the dependencies in the source information. This is an important differentiating factor, because with proper dependency data the results of information extraction can be made more accurate.
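To make the idea of label dependencies concrete, here is a minimal sketch of Viterbi decoding over a tiny linear-chain model. Everything in it is an assumption made for illustration: the `B-LOC`/`I-LOC`/`O` tag scheme, the capitalization-based emission scores, and the hand-set transition scores. A real system would learn these scores from labeled data.

```python
LABELS = ["O", "B-LOC", "I-LOC"]
FORBID = -10  # large penalty: I-LOC may not follow O or start a sentence

# TRANSITION[prev][cur]: hand-set scores for moving from one label to the next
TRANSITION = {
    "START": {"O": 0, "B-LOC": 0, "I-LOC": FORBID},
    "O": {"O": 0, "B-LOC": 0, "I-LOC": FORBID},
    "B-LOC": {"O": 0, "B-LOC": -1, "I-LOC": 1},
    "I-LOC": {"O": 0, "B-LOC": -1, "I-LOC": 1},
}


def emission(token, label):
    """Score a token/label pair from capitalization alone (toy assumption)."""
    if token[0].isupper():
        return -2 if label == "O" else 0
    return 0 if label == "O" else -3


def viterbi(tokens):
    """Return the highest-scoring label sequence for a non-empty token list."""
    # best[i][label] = (score of best path ending in label at i, previous label)
    best = [{
        lab: (TRANSITION["START"][lab] + emission(tokens[0], lab), None)
        for lab in LABELS
    }]
    for token in tokens[1:]:
        column = {}
        for cur in LABELS:
            prev = max(LABELS, key=lambda p: best[-1][p][0] + TRANSITION[p][cur])
            score = best[-1][prev][0] + TRANSITION[prev][cur] + emission(token, cur)
            column[cur] = (score, prev)
        best.append(column)
    # Follow the back-pointers from the best final label to the start.
    label = max(LABELS, key=lambda lab: best[-1][lab][0])
    path = [label]
    for column in reversed(best[1:]):
        label = column[label][1]
        path.append(label)
    return path[::-1]


tokens = "flights from New York City to Boston".split()
labels = viterbi(tokens)
print(list(zip(tokens, labels)))
# [('flights', 'O'), ('from', 'O'), ('New', 'B-LOC'), ('York', 'I-LOC'),
#  ('City', 'I-LOC'), ('to', 'O'), ('Boston', 'B-LOC')]
```

In this toy model the emission scores cannot tell `B-LOC` from `I-LOC`, since both score the same for a capitalized token. The transition scores settle it, which is the kind of dependency that labeling each token independently would miss.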

NOTE:

Several hybrid models have now been developed which work better than any single approach like Rule-based, Classification-based, or Sequential Labelling-Based Methods. These hybrid models are combinations of these approaches with other techniques like the Hidden Markov Model (HMM). These methodologies can also help you learn how to extract data from a website into Excel.

Role of NLP in Information Extraction: The Case of Named Entity Recognition

Named Entity Recognition is a Natural Language Processing technique that facilitates Information Extraction. It identifies and extracts descriptive items such as locations, names, organizations, and dates.

Named entity recognition can extract general entities such as places, foods, and names, as well as domain-specific entities such as football, goalkeeper, and defender, which makes it a very versatile method for information extraction. It can be implemented with supervised, unsupervised, or semi-supervised approaches on Natural Language Processing platforms.
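The simplest possible baseline for recognizing both general and domain-specific entities is a dictionary lookup combined with a pattern for dates. The sketch below is not one of the supervised, unsupervised, or semi-supervised approaches mentioned above; it is a hand-built stand-in, and the gazetteer entries, label names, and sample sentence are hypothetical.

```python
import re

# Hypothetical gazetteer: general entities (locations) and
# domain-specific ones (football positions).
GAZETTEER = {
    "manchester": "LOCATION",
    "new york": "LOCATION",
    "goalkeeper": "POSITION",
    "defender": "POSITION",
}

# Dates of the form "3 March 2021" (an assumed format for this sketch).
DATE_PATTERN = re.compile(
    r"\b\d{1,2} (?:January|February|March|April|May|June|July|August|"
    r"September|October|November|December) \d{4}\b"
)


def recognize_entities(text):
    """Return (surface form, label) pairs in order of appearance."""
    found = []
    for match in DATE_PATTERN.finditer(text):
        found.append((match.start(), match.group(), "DATE"))
    for phrase, label in GAZETTEER.items():
        pattern = r"\b" + re.escape(phrase) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            found.append((match.start(), match.group(), label))
    found.sort()  # order by position in the text
    return [(surface, label) for _, surface, label in found]


sentence = "The goalkeeper joined a club in Manchester on 3 March 2021."
entities = recognize_entities(sentence)
print(entities)
# [('goalkeeper', 'POSITION'), ('Manchester', 'LOCATION'), ('3 March 2021', 'DATE')]
```

A lookup like this only finds entities it has been told about in advance. Learned models are used precisely because they can also recognize names they have never seen.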

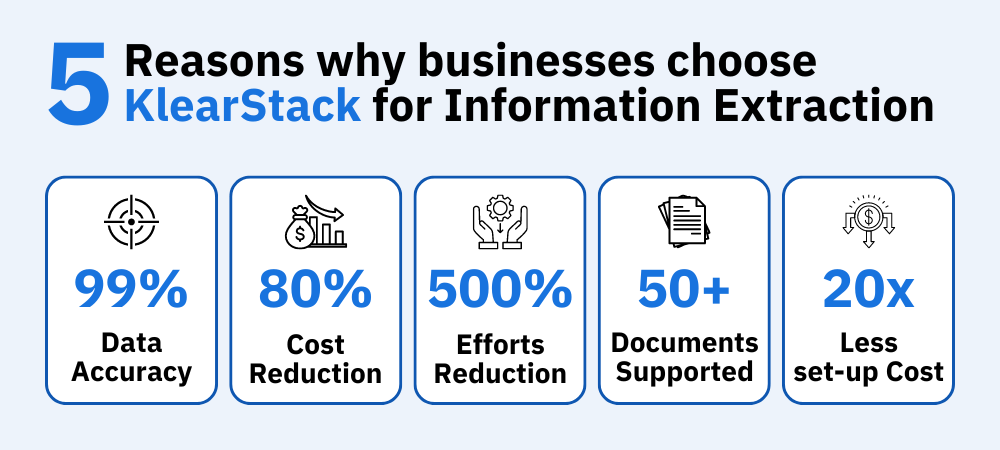

KlearStack: Effortless Information Extraction From Documents

By leveraging Natural Language Processing, Machine Learning, and Computer Vision, KlearStack has created a robust solution for Information Extraction from documents. Beyond extracting the required data, our solutions also interpret the text to provide error-free outputs to the end-user.

KlearStack also enables highly accurate data extraction from unseen, template-free documents. For documents that have never been processed through KlearStack before, data can be extracted from them with 20-30% higher accuracy compared to any other data extraction solution.

With the growing volume of unstructured documents in the professional world, an Information Extraction solution like KlearStack, which requires no templates whatsoever, can boost your operations with precise and high-quality extraction results.

Schedule a demo with our experts today to learn more about our solution.