Data extraction means gathering data from various sources and moving it to a centralized location for analysis and storage. According to Gartner, poor data quality costs organizations an average of roughly $15 million per year. Companies today face a critical challenge: extracting and using this vast amount of information efficiently enough to support sound business decisions.

- Are you struggling to consolidate scattered data from multiple platforms?

- Do you frequently encounter data quality issues?

- Is the manual handling of large datasets affecting your productivity?

Data extraction is the first step in turning raw data into actionable insights, supporting informed decisions, accurate analytics, and better operational performance.

What is Data Extraction?

Data extraction is the process of gathering and moving data from various sources like databases, APIs, or websites to a single location for analysis and storage. It simplifies complex, scattered information, making data more accessible and structured for further use.

This process often involves pulling key information from sources such as documents, web pages, PDFs, spreadsheets, and emails. By converting this data into structured formats, organizations streamline data-driven decision-making.

Common Sources of Data Extraction

Data is typically extracted from sources including:

- Databases (SQL, NoSQL)

- Spreadsheets (Excel, Google Sheets)

- Websites (Web Scraping)

- APIs (Application Programming Interfaces)

- Emails

- SaaS Platforms (Salesforce, HubSpot)

- Custom Internal Systems

These sources contain valuable insights businesses require for strategic planning and operations.

Importance of Data Extraction

Data extraction plays a central role in business operations. Accurate, reliable data is essential for achieving meaningful results and making informed decisions. Its main benefits are:

- Data extraction ensures the reliability and usability of data by transforming scattered information into a structured format that is easily analyzed and interpreted.

- By simplifying complex data and extracting key insights from various sources, including documents, web pages, and PDFs, data extraction enables businesses to access valuable information that would otherwise be inaccessible or overlooked.

- This process enhances the efficiency of data analysis and facilitates strategic planning, operational optimization, and targeted marketing efforts.

- Data extraction helps businesses access valuable data from diverse sources and overcome language barriers. By translating texts published in different languages, businesses can draw on insights from a global data pool and gain a competitive edge.

- Data extraction gives analytics and business intelligence tools access to new data sources, providing organizations with insights that support sustainable growth.

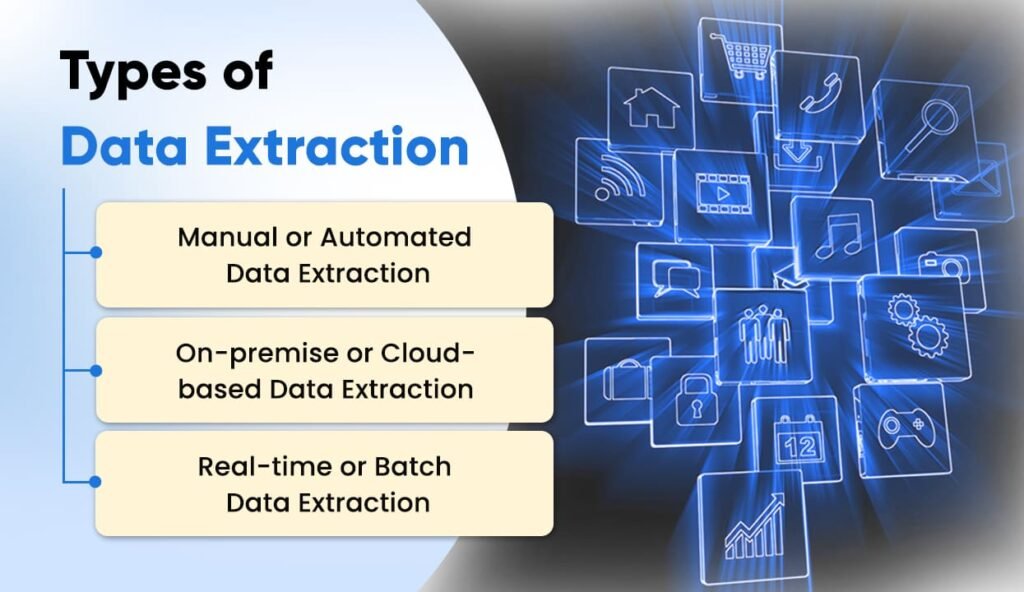

Types of Data Extraction

Data extraction methods can be categorized into three primary types:

Full Extraction

This method retrieves all available data from the source. It is ideal for initial data loads and migrations because it guarantees a complete copy, but it can be resource-intensive for large sources.

Incremental Extraction

Incremental extraction captures only the data that has changed since the last extraction. This approach reduces system load and suits datasets that are updated regularly.
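To make the difference between full and incremental extraction concrete, here is a minimal Python sketch using the standard-library `sqlite3` module. The `customers` table, its `updated_at` column, and the ISO-8601 timestamp strings are hypothetical; the sketch assumes every insert or update refreshes `updated_at`, which is what lets a stored "watermark" identify changed rows.

```python
import sqlite3

def full_extract(conn):
    # Full extraction: read every row from the (hypothetical) source table.
    return conn.execute("SELECT id, name, updated_at FROM customers ORDER BY id").fetchall()

def incremental_extract(conn, last_watermark):
    # Incremental extraction: read only rows changed after the previous run's watermark.
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # The new watermark is the latest timestamp seen; keep the old one if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# Build a small in-memory source so the sketch runs on its own.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", [
    (1, "Acme", "2024-01-01T10:00:00"),
    (2, "Globex", "2024-01-02T09:30:00"),
    (3, "Initech", "2024-01-03T14:15:00"),
])

all_rows = full_extract(conn)  # all three rows
changed, watermark = incremental_extract(conn, "2024-01-01T23:59:59")
print([row[0] for row in changed], watermark)  # [2, 3] 2024-01-03T14:15:00
```

The watermark returned by one run becomes the `last_watermark` of the next. A timestamp watermark cannot see deleted rows, so production pipelines often rely on change data capture or soft-delete flags instead; which approach fits depends on the source system.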

Web Scraping

Web scraping automates the extraction of data from websites. It’s essential for gathering data not directly accessible via traditional APIs or databases.
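A minimal sketch of the idea, using only Python's built-in `html.parser`. The markup and the `price` class are hypothetical, and the page is an inline string rather than a live download; a real scraper would fetch pages (for example with `urllib.request`), respect the site's terms and `robots.txt`, and cope with much messier HTML.

```python
from html.parser import HTMLParser

class PriceParser(HTMLParser):
    """Collects text inside elements whose class attribute is exactly 'price' (hypothetical markup)."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag.
        if ("class", "price") in attrs:
            self._in_price = True

    def handle_endtag(self, tag):
        self._in_price = False

    def handle_data(self, data):
        # Keep non-blank text that appears while inside a price element.
        if self._in_price and data.strip():
            self.prices.append(data.strip())

html = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$19.99</span></li>
  <li><span class="name">Gadget</span> <span class="price">$24.50</span></li>
</ul>
"""

parser = PriceParser()
parser.feed(html)
print(parser.prices)  # ['$19.99', '$24.50']
```

The exact-match check on `class` is a simplification: an element with `class="price sale"` would be skipped, which is one reason dedicated parsing libraries are common in practice.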

Overview of Popular Data Extraction Tools

Popular data extraction tools offer efficient ways to gather unstructured data and convert it into structured formats for analysis.

KlearStack

KlearStack allows users to extract information from unstructured and semi-structured documents with ease and high precision. What makes it unique is its template-less feature.

Import.io

Simplifies web data extraction, turning unstructured data into structured formats.

Octoparse

Provides a user-friendly interface for scraping dynamic websites efficiently.

Parsehub

Specializes in extracting data from JavaScript and AJAX pages, integrating it into various applications.

OutWitHub

Offers sophisticated scraping functions with an intuitive interface.

Web Scraper

Automates data collection and saves the results in multiple formats, such as CSV and JSON.

Mailparser

Extracts data from emails and files, automatically importing it into Google Sheets for easy access and analysis.

Data Extraction and the ETL Process

Data extraction serves as the first step in the Extract, Transform, Load (ETL) process:

- Extraction: Data is collected from various sources.

- Transformation: Data is cleaned and formatted for usability.

- Loading: Prepared data is moved to a storage destination, typically a data warehouse.

A clear understanding of ETL helps organizations effectively leverage their data assets.
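The three ETL stages can be sketched in a few lines of Python. This is an illustrative sketch rather than a production pipeline: the CSV content, the `orders` table, and the cleaning rules are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source: a CSV export with inconsistent formatting and a missing value.
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,
3,Carol,7.25
"""

def extract(text):
    # Extract: read raw rows from the source as dictionaries.
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    # Transform: drop rows without an amount, trim and title-case names, cast types.
    clean = []
    for row in rows:
        if not row["amount"].strip():
            continue
        clean.append((int(row["order_id"]), row["customer"].strip().title(), float(row["amount"])))
    return clean

def load(rows, conn):
    # Load: write the prepared rows into the destination table.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

warehouse = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), warehouse)
print(warehouse.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 10.5), (3, 'Carol', 7.25)]
```

Keeping each stage a separate function makes it easy to swap the source or destination without touching the cleaning rules.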

Data Extraction Techniques

Several techniques can be used to extract data from various sources. The right choice depends on the type of data and its source.

Here are the main techniques for data extraction:

Association: This technique identifies and extracts data based on relationships and patterns between items in a dataset. It uses measures such as “support” (how often a combination of items appears) and “confidence” (how often a rule holds when its first part is present) to find patterns that guide extraction.
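As a rough illustration of support and confidence, the sketch below computes both for item pairs in a small, made-up set of transactions and keeps the rules that pass hypothetical thresholds (`min_support=0.5`, `min_confidence=0.6`). It only checks pairs of items; algorithms such as Apriori extend the same idea to larger itemsets efficiently.

```python
from itertools import combinations

# Hypothetical transaction data: each set is one basket of items.
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]

def support(itemset, transactions):
    # Fraction of transactions that contain every item in the itemset.
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(antecedent, consequent, transactions):
    # How often antecedent -> consequent holds among transactions containing the antecedent.
    return support(antecedent | consequent, transactions) / support(antecedent, transactions)

def rules(transactions, min_support=0.5, min_confidence=0.6):
    # Check every ordered pair of items and keep rules that meet both thresholds.
    items = set().union(*transactions)
    found = []
    for a, b in combinations(sorted(items), 2):
        for lhs, rhs in (({a}, {b}), ({b}, {a})):
            if support(lhs | rhs, transactions) >= min_support:
                conf = confidence(lhs, rhs, transactions)
                if conf >= min_confidence:
                    found.append((lhs, rhs, conf))
    return found

print(confidence({"butter"}, {"bread"}, transactions))  # 1.0
for lhs, rhs, conf in rules(transactions):
    print(lhs, "->", rhs, round(conf, 2))
```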

Classification: This widely used method trains predictive models on labeled examples and then uses them to assign data to predefined classes or labels. The trained models drive classification-based extraction.
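A deliberately simple example of classification is a nearest-centroid classifier: training computes the average feature vector for each label, and prediction picks the label whose average is closest. The two-number feature vectors and the `invoice`/`receipt` labels are hypothetical; real extraction pipelines typically use richer features and stronger models.

```python
from math import dist
from statistics import fmean

# Hypothetical labelled training data: (feature vector, class label).
training = [
    ((1.0, 1.2), "invoice"),
    ((0.8, 1.0), "invoice"),
    ((4.0, 3.8), "receipt"),
    ((4.2, 4.1), "receipt"),
]

def train(samples):
    # "Training" here means computing one centroid (mean vector) per class.
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in by_label.items()
    }

def classify(model, features):
    # Assign the label whose centroid is closest in Euclidean distance.
    return min(model, key=lambda label: dist(model[label], features))

model = train(training)
print(classify(model, (1.1, 0.9)))  # invoice
print(classify(model, (3.9, 4.0)))  # receipt
```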

Clustering: This unsupervised learning technique groups similar data points into clusters based on their characteristics. It is often used as a preliminary step before other data extraction algorithms.
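The sketch below implements plain k-means on 2-D points, one common clustering algorithm. The points are made up, and the initial centroids are passed in by hand so the result is reproducible; practical implementations usually pick starting centroids randomly or with k-means++ and stop once assignments no longer change.

```python
from math import dist
from statistics import fmean

def kmeans(points, centroids, iterations=10):
    """Plain k-means on 2-D points, starting from the given initial centroids."""
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [
            tuple(fmean(coords) for coords in zip(*cluster)) if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical data: two visually obvious groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, clusters = kmeans(points, [(1.0, 1.0), (8.0, 8.0)])
print([len(c) for c in clusters])  # [3, 3]
```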

Regression: Regression models the relationship between one or more independent variables and a dependent variable in a dataset.
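For the simplest case, a single independent variable, ordinary least squares fits a line `y = slope * x + intercept`. The sketch below computes it directly from the standard formulas; the ad-spend and sales figures are invented for illustration.

```python
from statistics import fmean

def fit_line(xs, ys):
    # Ordinary least squares for y = slope * x + intercept.
    x_mean, y_mean = fmean(xs), fmean(ys)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean

# Hypothetical data: monthly ad spend (x) versus units sold (y).
spend = [1.0, 2.0, 3.0, 4.0, 5.0]
units = [3.0, 5.0, 7.0, 9.0, 11.0]
slope, intercept = fit_line(spend, units)
print(slope, intercept)  # 2.0 1.0
```

Python 3.10 and later also provide `statistics.linear_regression`, which returns the same slope and intercept for this one-variable case.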

Use Cases of Data Extraction in Various Industries

Alpine Industries, a leading manufacturer, needed to extract data from PDF documents every day. Its in-house team processed these documents manually into the company's ERP (Enterprise Resource Planning) system.

The task was time-consuming and hurt employee productivity. To overcome this challenge, Alpine Industries introduced a comprehensive data management platform to streamline the entire data process.

Like Alpine, organizations in many other sectors have simplified their data extraction processes through automation:

Retail: Retailers can extract pricing data from competitors’ websites and use it to make strategic price adjustments that improve competitiveness and profitability.

Healthcare: Gathering patient feedback from online sources helps providers identify areas for improvement and raise the quality of care.

Finance: Collecting market data supports better investment decisions and helps banks optimize their portfolios.

E-commerce: Analyzing customer behavior guides product offerings and marketing strategies, driving sales.

Challenges and Considerations in Data Extraction

Data extraction is a necessity, but despite technological advances, businesses still face several challenges:

1. Data Diversity

Managing varied data formats and structures is a demanding part of data extraction. Sources may use different formats, such as CSV, JSON, and XML, and different structures, such as relational and NoSQL databases.

Handling this diversity requires robust extraction processes.
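One common way to handle mixed formats is to write a small parser per format that maps every source onto the same record shape. This Python sketch does that for CSV, JSON, and XML using only the standard library; the `id`/`name` fields and the sample payloads are hypothetical. The Python documentation warns that `xml.etree.ElementTree` is not secure against maliciously constructed XML, so untrusted input needs extra care.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical inputs: the same kind of record delivered in three formats.
CSV_DATA = "id,name\n1,Alice\n"
JSON_DATA = '[{"id": 2, "name": "Bob"}]'
XML_DATA = "<customers><customer><id>3</id><name>Carol</name></customer></customers>"

def from_csv(text):
    return [{"id": int(r["id"]), "name": r["name"]} for r in csv.DictReader(io.StringIO(text))]

def from_json(text):
    return [{"id": int(r["id"]), "name": r["name"]} for r in json.loads(text)]

def from_xml(text):
    root = ET.fromstring(text)
    return [
        {"id": int(c.findtext("id")), "name": c.findtext("name")}
        for c in root.iter("customer")
    ]

# Route each source to the parser for its format, producing one uniform list of records.
PARSERS = {"csv": from_csv, "json": from_json, "xml": from_xml}
sources = [("csv", CSV_DATA), ("json", JSON_DATA), ("xml", XML_DATA)]
records = [rec for fmt, payload in sources for rec in PARSERS[fmt](payload)]
print(records)
# [{'id': 1, 'name': 'Alice'}, {'id': 2, 'name': 'Bob'}, {'id': 3, 'name': 'Carol'}]
```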

2. Quality Assurance

Ensuring data accuracy, completeness, and consistency is crucial for reliable analysis and decision-making. However, pulling data from many sources raises the risk of errors such as missing or incorrect values, so quality assurance measures are essential.

These measures validate and clean the extracted data to keep it reliable.
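A minimal sketch of validation and cleaning in Python. The field names (`invoice_id`, `amount`, `date`) and the rules (required fields, numeric non-negative amounts, no duplicate IDs) are hypothetical examples; real rules come from the target system's requirements. Rejected records are kept with their reasons rather than silently dropped, so they can be reviewed or re-extracted.

```python
def validate(record):
    # Return a list of problems found in one extracted record (empty list means valid).
    problems = []
    for field in ("invoice_id", "amount", "date"):
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    amount = record.get("amount")
    if amount not in (None, ""):
        try:
            if float(amount) < 0:
                problems.append("negative amount")
        except ValueError:
            problems.append("amount is not a number")
    return problems

def clean(records):
    # Split records into valid rows and rejected rows with reasons; reject repeated invoice_ids.
    valid, rejected, seen = [], [], set()
    for rec in records:
        problems = validate(rec)
        if not problems and rec["invoice_id"] in seen:
            problems.append("duplicate invoice_id")
        if problems:
            rejected.append((rec, problems))
        else:
            seen.add(rec["invoice_id"])
            valid.append({**rec, "amount": float(rec["amount"])})
    return valid, rejected

records = [
    {"invoice_id": "INV-1", "amount": "120.00", "date": "2024-03-01"},
    {"invoice_id": "INV-2", "amount": "", "date": "2024-03-02"},
    {"invoice_id": "INV-3", "amount": "abc", "date": "2024-03-03"},
    {"invoice_id": "INV-1", "amount": "120.00", "date": "2024-03-01"},
]
valid, rejected = clean(records)
print(len(valid), [reasons for _, reasons in rejected])
# 1 [['missing amount'], ['amount is not a number'], ['duplicate invoice_id']]
```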

3. Scalability

Data volumes keep growing as technology evolves, so handling them efficiently is essential. Extracting, processing, and managing massive datasets requires scalable infrastructure and optimized processes to prevent bottlenecks and ensure timely data delivery.
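One basic technique for large volumes is to process data in fixed-size batches rather than loading everything at once. The sketch below uses a Python generator to do that; `range` stands in for a large source such as a database cursor or file, and `process` is a hypothetical placeholder for real transformation work.

```python
from itertools import islice

def read_in_batches(rows, batch_size):
    # Yield fixed-size batches so only one batch is held in memory at a time.
    iterator = iter(rows)
    while batch := list(islice(iterator, batch_size)):
        yield batch

def process(batch):
    # Placeholder transformation: here it just sums the values in the batch.
    return sum(batch)

source = range(1, 1_000_001)  # stands in for a large, lazily read source
totals = [process(b) for b in read_in_batches(source, batch_size=100_000)]
print(len(totals), sum(totals))  # 10 500000500000
```

On Python 3.12 and later, `itertools.batched` provides similar batching (yielding tuples instead of lists).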

4. Security and Compliance

Complying with data standards and regulations is another necessity. Requirements such as GDPR, HIPAA, and PCI DSS set strict rules for handling sensitive data. Protecting sensitive information during extraction prevents unauthorized access, data breaches, and privacy violations.

It also reduces the legal and reputational risks of non-compliance. Strong security measures should include encryption, access controls, and secure transfer protocols.

5. Legacy System Integration

Combining old and new technology is difficult in data extraction. Legacy systems may rely on outdated or proprietary formats and interfaces, which makes integrating them with modern extraction tools and platforms slow and complex. Overcoming these compatibility issues is essential.

Smooth integration is what makes reliable extraction from legacy systems possible.

6. Budget Constraints

Businesses must balance costs against the need for effective extraction. Robust extraction tools, infrastructure, and security can be expensive, especially for small and medium-sized enterprises with limited budgets. Affordable solutions help protect profitability.

Such solutions must still meet data extraction needs without sacrificing quality or security.

Why Should You Choose KlearStack?

Organizations require accurate and efficient data extraction solutions, and KlearStack fulfills these needs with unique capabilities:

Solutions That Matter:

- Template-free data processing, adaptable to all document types.

- Self-learning algorithms that continuously enhance accuracy.

- End-to-end automation minimizing manual efforts.

Proven Performance:

- Accuracy: Achieve up to 99% data extraction accuracy.

- Cost Savings: Reduce manual data entry costs by 80%.

- Scalable Processing: Easily manage high volumes of data daily.

KlearStack offers secure, compliant document handling to meet diverse business requirements. Ready to enhance your data extraction capabilities? Book a free demo today.

Conclusion

Data extraction techniques retrieve and combine information from many sources, enabling analysis, manipulation, and storage for many purposes. Extraction methods fall broadly into automated and manual approaches and rely on tools such as SQL for relational databases.

As the first step in the ETL process, data extraction makes data handling efficient and supports accurate, timely insights for informed decisions.

Key takeaways include:

- Significant improvement in data quality.

- Enhanced strategic decision-making capabilities.

- Cost-effective and efficient analytics.

As discussed earlier, there are many extraction techniques; choose the one that best fits your specific requirements.

Using effective data extraction methods can lead to substantial operational and financial benefits.